In recent years, the mobile health industry has been booming. Companies like Fitbit, Moves and Nike are leading this revolution. The essential idea behind these services is that you can measure your physical activity, calories burned, calorie intake or food consumption, and the quality of your sleep. Since this is becoming a big deal, many startups are naturally trying to take advantage of this data. Some companies provide integration points with these services and unify the data; others are simply proxies to these APIs.

The challenges for these startups are data unification, rate limits and users’ expectation of instant feedback. When you work with multiple APIs, each one has its own format that needs to be parsed and stored. Rate limiting is another issue you have to solve. For example, if you want the step count for a month and the API provides this data only on a daily basis, fetching one month takes 30 requests, and the user may hit the “sync” button more than once. To get higher rate limits, you would have to sign a contract with the data provider, which can be a tedious process. Users also expect to see their steps immediately after they synchronize with the provider. Finally, providers sometimes change their APIs unannounced, and you may not notice.

What did we have?

We started out with a Ruby on Rails app whose synchronization logic lived in modules included in ActiveRecord models. It worked, but it was nearly impossible to reason about. The code was scattered all over the place, with tons of duplication.

How did we solve that problem?

Well, with good architecture, of course! The first thing we needed to do was cover the whole thing with integration tests, to ensure we did not break anything along the way. By integration tests, I mean tests that exercised the API interactions together with our data layer and business logic layer; no UI code was touched. We recorded all request/response cycles and verified that the response data was parsed, unified and stored.
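The article does not say how the request/response cycles were recorded; in Ruby this is often done with a gem such as VCR. The sketch below avoids any gem and only illustrates the idea, under the assumption of a made-up payload format: a hypothetical `ReplayClient` answers from a hand-written recording, and a hypothetical `sync_steps` routine is checked end to end (fetch, parse, unify, store).

```ruby
require "json"

# Hypothetical recording: request line => raw response body captured earlier.
RECORDED_RESPONSES = {
  "GET /steps?date=2015-03-01" => '{"steps":[{"date":"2015-03-01","count":"8512"}]}'
}.freeze

# Stand-in HTTP client that replays recorded bodies instead of calling the network.
class ReplayClient
  def initialize(recordings)
    @recordings = recordings
  end

  # Raises KeyError if the code under test makes a request that was never recorded.
  def get(path)
    @recordings.fetch("GET #{path}")
  end
end

# Hypothetical sync routine under test: fetch, parse, unify, store.
def sync_steps(client, store, date)
  body = JSON.parse(client.get("/steps?date=#{date}"))
  body.fetch("steps").each do |entry|
    store << { date: entry.fetch("date"), steps: Integer(entry.fetch("count")) }
  end
  store
end

store = sync_steps(ReplayClient.new(RECORDED_RESPONSES), [], "2015-03-01")
```

Because an unrecorded request raises, the test also catches code paths that would silently hit a live API.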

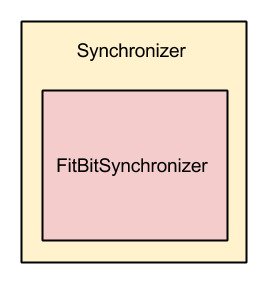

Once we were confident in our test coverage, we chose an architecture with a superclass responsible for storing data and asking questions of the system, while subclasses handled all API connections and data parsing. It worked well enough at the beginning, when we had two or three integrations, but at some point we discovered that testing this system required building or stubbing a lot of dependencies. As you can imagine, this architecture also clearly violated some object-oriented best practices, most obviously the single responsibility principle: both the subclasses and the superclass had too many responsibilities.
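To make the problem concrete, here is a rough sketch of the shape of that first design. All names are hypothetical, and a plain Array stands in for the database. The base class owns persistence and a “question” (`synced?`), while each subclass owns fetching and parsing through template methods.

```ruby
# Rough shape of the first design (hypothetical names): the base class owns
# persistence and "questions", subclasses own fetching and parsing.
class BaseIntegration
  def initialize(user, storage)
    @user = user
    @storage = storage # stands in for ActiveRecord persistence
  end

  # Template method: subclasses must implement fetch_raw and parse.
  def sync(date)
    parse(fetch_raw(date)).each { |record| @storage << record.merge(user: @user) }
  end

  # A "question" answered by the superclass.
  def synced?(date)
    @storage.any? { |record| record[:user] == @user && record[:date] == date }
  end
end

class StepsIntegration < BaseIntegration
  # In the real system this would call a provider gem over HTTP.
  def fetch_raw(date)
    { "date" => date, "count" => 1000 }
  end

  def parse(raw)
    [{ date: raw["date"], steps: raw["count"] }]
  end
end

# Even to exercise parse alone, a test has to build the whole object,
# including a user and storage that parse never touches.
integration = StepsIntegration.new("user-1", [])
parsed = integration.parse({ "date" => "2015-03-01", "count" => 7 })
```

With a handful of integrations this coupling is tolerable; as each subclass gains its own HTTP client, credentials and persistence needs, the setup required for every test grows with it.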

When we concluded that this wouldn’t fly, we turned to best practices in this area, or simply to design patterns that would help us conceptualize the architecture we wanted. Our main goal was to make the system testable, composable, decoupled from the main Rails app and easy to reason about.

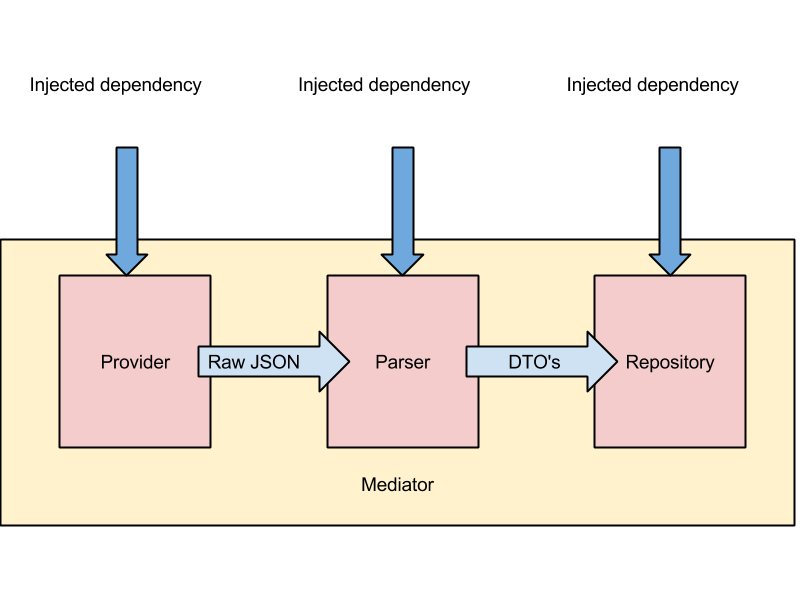

We chose to refactor the system as follows. Every external gem that talked to a provider’s API would be wrapped in a Provider class, so that the object interface was consistent across all data-fetching libraries. We also made sure that every provider returned its JSON already parsed into a Hash.
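A minimal sketch of such a wrapper, assuming a hypothetical gem client `AcmeFitnessClient` that returns raw JSON strings. The `fetch(kind, date)` method is an assumed shared interface for illustration, not the article’s actual one; its only promise is that callers always receive a parsed Hash. The client is injected so unit tests can pass a stub.

```ruby
require "json"

# Hypothetical third-party gem client; its method name and return type
# (a raw JSON string) are invented for illustration.
class AcmeFitnessClient
  def daily_steps(day)
    %({"day":"#{day}","total_steps":4200})
  end
end

# Provider: wraps the gem behind the interface shared by all providers.
# #fetch(kind, date) always returns JSON already parsed into a Hash.
class AcmeProvider
  def initialize(client: AcmeFitnessClient.new)
    @client = client
  end

  def fetch(kind, date)
    case kind
    when :steps then JSON.parse(@client.daily_steps(date.to_s))
    else raise ArgumentError, "unsupported data kind: #{kind}"
    end
  end
end

steps = AcmeProvider.new.fetch(:steps, "2015-03-01")
```

Each additional gem gets its own small Provider class; everything downstream depends only on `fetch` and a Hash.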

Once the providers were built and unit tested, we switched our attention to data unification, handled by objects we called parsers. A parser takes data from a specific provider and converts it into our own unified format. Had we used unpersisted ActiveRecord model instances as the output, we would not have been decoupled from Rails, so we chose simple Virtus.model objects that could later be persisted to the database.
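The following shows two parsers converging on one unified record. The article used `Virtus.model`; here a keyword-initialized `Struct` stands in so the example runs without gems. Both provider payload formats, and the `AcmeStepsParser` and `BetaStepsParser` names, are invented for illustration.

```ruby
require "date"

# Unified, persistence-agnostic record (stand-in for a Virtus.model class).
StepRecord = Struct.new(:provider, :date, :steps, keyword_init: true)

# Parser for a hypothetical provider that sends {"day" => ..., "total_steps" => ...}.
class AcmeStepsParser
  def parse(payload)
    StepRecord.new(
      provider: :acme,
      date: Date.iso8601(payload.fetch("day")),
      steps: Integer(payload.fetch("total_steps"))
    )
  end
end

# Parser for a second hypothetical provider with a nested, string-valued format.
class BetaStepsParser
  def parse(payload)
    StepRecord.new(
      provider: :beta,
      date: Date.iso8601(payload.fetch("date")),
      steps: Integer(payload.fetch("summary").fetch("steps"))
    )
  end
end

acme = AcmeStepsParser.new.parse({ "day" => "2015-03-01", "total_steps" => 4200 })
beta = BetaStepsParser.new.parse({ "date" => "2015-03-01", "summary" => { "steps" => "8512" } })
```

`fetch` and `Integer()` fail loudly on missing keys or non-numeric values, which helps surface unannounced API changes early.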

With the data in the shape we needed, we required a mechanism to store it. Again turning to patterns, we chose the repository pattern: the repository takes DTOs (data transfer objects) and takes care of persisting them. We also needed some way to ask questions of the system. For example, we did not want to synchronize 30 days of data if the user had already synchronized once. If the user had data up to two days ago, we would launch a synchronization only for those days, with the last sync date included, because the data for that day might have been incomplete. At the time, it seemed natural to put this code in the repository as well. All interactions with the database went through the repository.
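Here is a minimal in-memory version of such a repository, assuming records keyed by provider and date. The 30-day first sync comes from the example above; capping older gaps at the same window is an assumption. `dates_to_sync` answers the “what should we fetch?” question: the full window on a first sync, otherwise from the last synced date (inclusive) through today. A real implementation would sit on top of ActiveRecord or SQL, and all method names are hypothetical.

```ruby
require "date"

StepRecord = Struct.new(:provider, :date, :steps, keyword_init: true)

# In-memory repository sketch. Stores unified records and answers questions
# about them; a real one would sit on ActiveRecord or SQL.
class StepRepository
  MAX_WINDOW_DAYS = 30 # assumption: a first sync covers 30 days

  def initialize
    @records = {}
  end

  # Upsert keyed by provider and date, so re-syncing a partial day replaces
  # the earlier value instead of duplicating it.
  def save(record)
    @records[[record.provider, record.date]] = record
  end

  def last_synced_date(provider)
    @records.keys.select { |key_provider, _date| key_provider == provider }.map(&:last).max
  end

  # Dates still to fetch: the whole window on first sync, otherwise from the
  # last synced date (included, as its data may have been partial) to today.
  def dates_to_sync(provider, today: Date.today)
    earliest = today - (MAX_WINDOW_DAYS - 1)
    last = last_synced_date(provider)
    ((last ? [last, earliest].max : earliest)..today)
  end

  def size
    @records.size
  end
end
```

Passing `today:` explicitly keeps the date arithmetic deterministic in tests.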

With everything set up, we needed a way to build these objects and coordinate the interactions between them. We chose the mediator pattern: a mediator takes care of all interactions between the repository, parsers and providers. Because these dependencies were injected into the mediator, we were able to unit test the whole system while keeping our integration test coverage.
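A possible shape for such a mediator, with hypothetical names throughout. It receives the provider, parser and repository through its constructor and relies only on their duck-typed methods (`fetch`, `parse`, `dates_to_sync`, `save`), so a unit test can inject fakes, as the usage at the end of the block does.

```ruby
require "date"

StepRecord = Struct.new(:provider, :date, :steps, keyword_init: true)

# Mediator: the only object that knows how providers, parsers and the
# repository interact. Collaborators are injected and duck-typed.
class StepsSyncMediator
  def initialize(provider:, parser:, repository:, provider_name:)
    @provider = provider
    @parser = parser
    @repository = repository
    @provider_name = provider_name
  end

  # Asks the repository what to fetch, then fetches, parses and saves each day.
  def call(today: Date.today)
    @repository.dates_to_sync(@provider_name, today: today).map do |date|
      record = @parser.parse(@provider.fetch(:steps, date))
      @repository.save(record)
      record
    end
  end
end

# Fakes for a unit test (hypothetical).
class FakeProvider
  attr_reader :calls

  def initialize
    @calls = []
  end

  def fetch(_kind, date)
    @calls << date
    { "day" => date.iso8601, "total_steps" => 100 }
  end
end

class FakeParser
  def parse(payload)
    StepRecord.new(provider: :fake, date: Date.iso8601(payload["day"]), steps: payload["total_steps"])
  end
end

class FakeRepository
  attr_reader :saved

  def initialize(dates)
    @dates = dates
    @saved = []
  end

  def dates_to_sync(_provider_name, today:)
    @dates
  end

  def save(record)
    @saved << record
  end
end

provider = FakeProvider.new
repository = FakeRepository.new([Date.new(2015, 3, 1), Date.new(2015, 3, 2)])
mediator = StepsSyncMediator.new(
  provider: provider, parser: FakeParser.new,
  repository: repository, provider_name: :fake
)
records = mediator.call
```

In production, the same mediator would be built with the real provider, parser and repository for each integration.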

This architecture proved very useful: when Active Record was not fast enough for the persistence part, we swapped it out for plain SQL queries, and the repository’s interface did not change a bit.
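The code below illustrates why such a swap can leave callers untouched. It assumes PostgreSQL and a connection object that responds to `exec_params(sql, params)`, the shape of the pg gem’s `PG::Connection#exec_params`; a fake connection is used here. The table and column names are hypothetical. The sync-window logic is pulled into a module that depends only on `last_synced_date`, so any backend exposing the same methods can reuse it.

```ruby
require "date"

StepRecord = Struct.new(:provider, :date, :steps, keyword_init: true)

# Sync-window logic shared by every repository backend; it only needs
# #last_synced_date, so swapping persistence does not touch it.
module SyncWindow
  MAX_WINDOW_DAYS = 30 # assumption: a first sync covers 30 days

  def dates_to_sync(provider, today: Date.today)
    earliest = today - (MAX_WINDOW_DAYS - 1)
    last = last_synced_date(provider)
    ((last ? [last, earliest].max : earliest)..today)
  end
end

# Raw-SQL repository exposing the same methods an ActiveRecord-backed one would.
class SqlStepRepository
  include SyncWindow

  UPSERT_SQL = <<~SQL
    INSERT INTO step_records (provider, date, steps) VALUES ($1, $2, $3)
    ON CONFLICT (provider, date) DO UPDATE SET steps = EXCLUDED.steps
  SQL

  LAST_DATE_SQL = "SELECT MAX(date) AS last_date FROM step_records WHERE provider = $1"

  def initialize(connection)
    @connection = connection
  end

  def save(record)
    @connection.exec_params(UPSERT_SQL, [record.provider.to_s, record.date.iso8601, record.steps])
  end

  def last_synced_date(provider)
    row = @connection.exec_params(LAST_DATE_SQL, [provider.to_s]).first
    value = row && row["last_date"]
    value && Date.iso8601(value)
  end
end

# Fake connection: records statements and returns canned rows.
class FakeConnection
  attr_reader :statements

  def initialize(rows)
    @rows = rows
    @statements = []
  end

  def exec_params(sql, params)
    @statements << [sql, params]
    @rows
  end
end
```

The mediator only ever calls `save` and `dates_to_sync`, so replacing an ActiveRecord-backed repository with this one requires no change on its side.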

Another, unexpected benefit showed up when we had to build new integrations without being able to test against the providers’ APIs. We only had the documentation, while the agreement to use the API was still being signed. We were able to build the integration based solely on the documentation, without making a single real request. When the time came, it was trivial to replace the missing bits with working code.
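One way to do this is a documentation-backed stub, assuming the provider documents a sample payload like the made-up `DOCUMENTED_SAMPLE` below. The stub implements the same hypothetical `fetch(kind, date)` interface as a real provider and returns a parsed Hash, so parsers, the repository and the mediator can all be built and tested against it.

```ruby
require "json"

# Made-up sample payload, as it might appear in a provider's API documentation.
DOCUMENTED_SAMPLE = '{"date":"2015-03-01","summary":{"steps":"8512"}}'

# Stub provider built purely from the documentation. It honours the same
# (hypothetical) #fetch(kind, date) interface as a real provider, returning a
# parsed Hash, so the rest of the pipeline cannot tell the difference.
class DocumentedSampleProvider
  def initialize(sample_json = DOCUMENTED_SAMPLE)
    @sample = JSON.parse(sample_json)
  end

  def fetch(kind, date)
    raise ArgumentError, "unsupported data kind: #{kind}" unless kind == :steps

    # Reuse the documented shape, only stamping in the requested date.
    @sample.merge("date" => date.to_s)
  end
end

provider = DocumentedSampleProvider.new
payload = provider.fetch(:steps, "2015-03-02")
```

Once API access is granted, a real provider class exposing the same `fetch` method is injected in its place, and nothing downstream needs to change.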

The main takeaway from this article is that you should not be afraid to invest in good architecture; it will pay off further down the road. Also, keep in mind that architecture is not something you do once and forget about. It should evolve as your business changes.

Don’t write code – Solve problems.