As a developer, sometimes you need to do server maintenance, manage deployments and figure out the architecture from a server standpoint.

In the Ruby world, people usually go for Capistrano, an application server and a database server. Sometimes the DB server and the app server are located on one machine. That might be fine for a small app, but when you need to add another server, you have to install all its dependencies, set up the app and put some kind of load balancer in front of both servers so that incoming requests are distributed between the machines. It is a tedious job, and it certainly does not help us move any faster.

Of course, you can always go for something like Heroku, but sometimes it is just not an option.

In this article, I will give a high-level overview of how we have set up our servers with the help of Kubernetes and Docker and why we have chosen such a combination. This article will serve as a reference point for DevOps people who will be maintaining a project.

Why Docker?

Docker allows you to set up an image and forget about the dependencies. The only things we need to worry about are building the image, making sure it runs and pushing it to the repository. If it runs on your local machine, then it will run on your server.

Container orchestration

One problem that I faced was how to orchestrate the containers and how to manage the deployments. A few solutions popped up: Docker Swarm, Kubernetes and Amazon’s Container service.

Since our current infrastructure was located on the AWS platform, we wanted to try Amazon’s Container service first. The first issue I ran into was that I needed to collect logs in one place. Interestingly enough, Docker has a logging driver for AWS CloudWatch, but the Container service did not support it. There was a support ticket open to resolve that, but it seemed to be taking them ages to implement. I was able to deploy my app, but I was not happy with the experience.

I read some comparison articles on Kubernetes and Docker Swarm, and Kubernetes seemed more mature, with the ideas behind it more battle-tested. So the next thing we tried was launching a Kubernetes infrastructure on AWS. There was a pretty straightforward installer for it. It worked okay, but Kubernetes required a dedicated machine (the Kubernetes master) to orchestrate the containers, and we would have had to handle all the updates ourselves.

There was an option to use Kubernetes with Google Cloud Platform, and we went with it since Google hosts the Kubernetes master and we could do updates pretty easily.

The platform costs are minimal since you pay only for the machines that you use. There is no additional overhead in the case of Google Cloud Platform.

The infrastructure and the app

We have two apps that work together. The administration and user panel are written in Node.js and the chat room is written in Elixir. Both of them use the same database, and the Node app uses Redis for session storage and other non-critical information.

The apps need to communicate with each other and, obviously, with the database. The chat app has to be load-balanced, but it also has to keep each connection open because chat uses WebSockets.

Kubernetes terminology

One thing that might confuse a lot of people at the beginning is the terminology and understanding what goes where.

Pods – A pod is one or more Docker containers that should work as a group. They start and stop as a group. If there are multiple containers, they can reach each other via localhost. Most of the time there will be a single container within a pod.

Deployments – Deployments are objects that allow you to define the way you want to deploy your app. There are multiple strategies that you can use. Each Deployment also creates and manages ReplicaSets.

ReplicaSet – This is a definition of how many and what kind of pods should be running at any given time. If a pod goes down, it is the job of the ReplicaSet to bring it up again.

Services – You put a service in front of anything that needs to communicate with the outside world or within the cluster. If you create a service that can be accessed from the outside, depending on where your cluster is located, it will be assigned either a domain name (AWS) or an IP address (Google Cloud). If a service needs to be accessed within the cluster, Kubernetes will give it an internal DNS address based on the name you give this service. It also acts as a load balancer. It finds the pods that it is supposed to route to by their labels.

Nodes – Nodes are the actual VMs that are running pods.
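To make these terms a bit more concrete, here is a minimal sketch of a Deployment and a Service for the chat app, written as Python dictionaries and serialized to JSON (which `kubectl` accepts alongside YAML). The names, the image, the port numbers and the replica count are hypothetical and are not taken from our actual setup; `apps/v1` is the current API version for Deployments, and older clusters used a different one. The `selects` helper only illustrates how a Service selector is matched against pod labels; it is not part of Kubernetes.

```python
import json

# Labels shared by the pod template and the Service selector (hypothetical values).
CHAT_LABELS = {"app": "chat"}

# A Deployment asks for 3 replicas of a pod built from the template below.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "chat"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": CHAT_LABELS},
        "template": {
            "metadata": {"labels": CHAT_LABELS},
            "spec": {
                "containers": [
                    {
                        "name": "chat",
                        "image": "example.com/chat:1.0.0",  # hypothetical image
                        "ports": [{"containerPort": 4000}],
                    }
                ]
            },
        },
    },
}

# A Service routes traffic on port 80 to port 4000 of every pod whose labels match.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "chat"},
    "spec": {
        "selector": CHAT_LABELS,
        "ports": [{"port": 80, "targetPort": 4000}],
    },
}


def selects(selector, pod_labels):
    """Return True when every key/value pair in the selector appears in the pod's labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def to_manifest(*objects):
    """Wrap the objects in a v1 List so they form a single JSON document."""
    return json.dumps({"apiVersion": "v1", "kind": "List", "items": list(objects)}, indent=2)


if __name__ == "__main__":
    print(to_manifest(deployment, service))
```

Assuming the script is saved as `manifests.py` (a hypothetical file name), its output could be piped into `kubectl apply -f -`.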

Since Kubernetes has its own internal network, communication between the pods can be set up really easily. For example, if you have an API service that is called api-server, you can access it via http://api-server from other containers. This is better than using environment variables to pass information about other containers’ locations, since containers might get new addresses or might even get spawned on other machines. The order in which containers are spawned does not matter either.
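As a small illustration of the naming scheme, the sketch below builds the in-cluster URL for a service. The helper `service_url` is hypothetical; the fully qualified form `<name>.<namespace>.svc.cluster.local` assumes the default cluster domain, which a cluster may override.

```python
def service_url(name, namespace=None, port=None, scheme="http", cluster_domain="cluster.local"):
    """Build the in-cluster URL for a Service.

    Within the same namespace the bare service name resolves on its own;
    across namespaces the fully qualified name is used instead.
    """
    host = name if namespace is None else f"{name}.{namespace}.svc.{cluster_domain}"
    if port is not None:
        host = f"{host}:{port}"
    return f"{scheme}://{host}"


if __name__ == "__main__":
    print(service_url("api-server"))
    print(service_url("api-server", namespace="default", port=8080))
```

An app can then keep only the service name in its configuration and let cluster DNS resolve it, no matter which node the pods end up on.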

Another advantage of Kubernetes is the concept of Pods. Generally, it is not a good idea to have multiple services running within one container. Just think about how you would handle the logs. Kubernetes solves this problem with another abstraction that can hold multiple containers, which are started and stopped as a unit.

Big picture

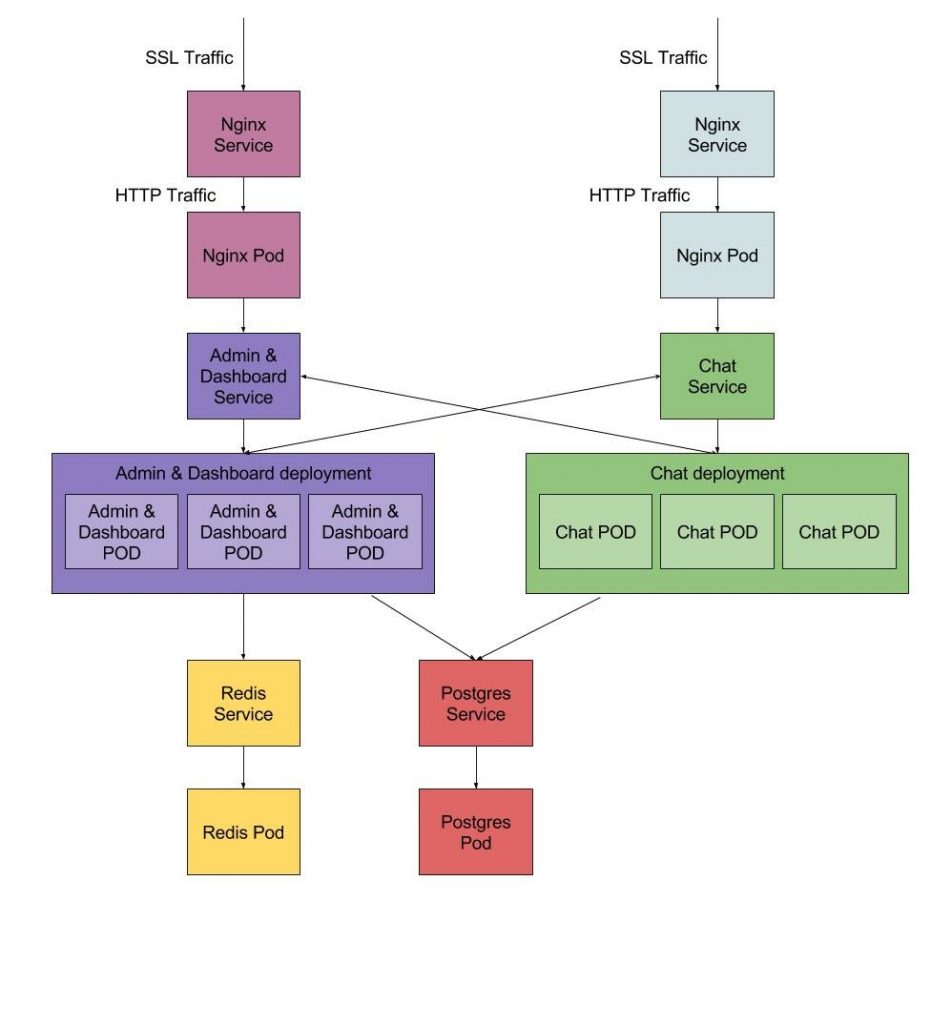

When traffic comes in, an NGINX service routes it to the NGINX pod, which in turn decrypts the SSL traffic into regular HTTP traffic. We used Google’s NGINX SSL proxy image and adjusted it to work with WebSockets. The result can be seen here.
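Our adjusted image is not reproduced here, but the kind of change involved can be sketched. NGINX needs HTTP/1.1 and the `Upgrade` and `Connection` headers passed through in order to proxy WebSocket connections. The Python helper below renders such a location block as text; `websocket_location`, the `/socket` path and the `chat` upstream are hypothetical names used only for illustration.

```python
def websocket_location(path, upstream):
    """Render an NGINX location block that proxies WebSocket upgrades to `upstream`."""
    return "\n".join([
        f"location {path} {{",
        f"    proxy_pass http://{upstream};",
        "    proxy_http_version 1.1;",                    # the upgrade mechanism needs HTTP/1.1
        "    proxy_set_header Upgrade $http_upgrade;",    # forward the client's Upgrade header
        '    proxy_set_header Connection "upgrade";',
        "    proxy_set_header Host $host;",
        "}",
    ])


if __name__ == "__main__":
    # "chat" stands for the internal DNS name of the chat service.
    print(websocket_location("/socket", "chat"))
```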

The sole goal of NGINX here is to decrypt traffic and send it forward.

NGINX then forwards the traffic either to the admin & dashboard service or to the chat service, which in turn routes it to one of the pods that the Deployment’s ReplicaSet controls. Chat and admin communicate with each other through services, and both use the Postgres service to work with the Postgres pod. Admin uses the Redis service to work with the Redis database.

Note that we do not talk about VMs (Nodes) in this article. They are not relevant here because the available resources are treated as a single pool.

The Redis, NGINX and Postgres pods are controlled by their own ReplicaSets or ReplicationControllers (the older version of ReplicaSet).
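The idea of a controller keeping a fixed number of pods alive can be illustrated with a toy reconciliation loop. This is only a simulation of the concept in plain Python, not how Kubernetes is implemented; the pod names and the `reconcile` function are hypothetical.

```python
import itertools


def reconcile(desired, running, name_source):
    """One pass of a toy control loop.

    Starts pods until `desired` are running and drops surplus pods
    when there are too many. Returns the resulting list of pod names.
    """
    pods = list(running)
    while len(pods) < desired:
        pods.append(next(name_source))  # "start" a replacement pod
    return pods[:desired]               # "stop" any surplus pods


# An endless supply of fresh pod names: chat-1, chat-2, ...
names = (f"chat-{n}" for n in itertools.count(1))

pods = reconcile(3, [], names)    # initial rollout
pods.remove("chat-2")             # simulate a pod going down
pods = reconcile(3, pods, names)  # the controller brings the count back to 3
print(pods)                       # ['chat-1', 'chat-3', 'chat-4']
```

The crashed pod is not restarted under its old name. A new pod takes its place, which is one more reason to reach pods through a service rather than by their individual addresses.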

Conclusions

Kubernetes allowed us to move much faster once it was set up. It has quite a steep learning curve, but it is worth it. In practice, it takes several days to familiarize developers with the Kubernetes workflow. Being familiar with Docker helps a lot. If you want to become a good DevOps engineer, you need to learn about containerized architecture.

I hope you enjoyed this high-level overview of our Kubernetes deployment. If you have any comments, please feel free to share them!